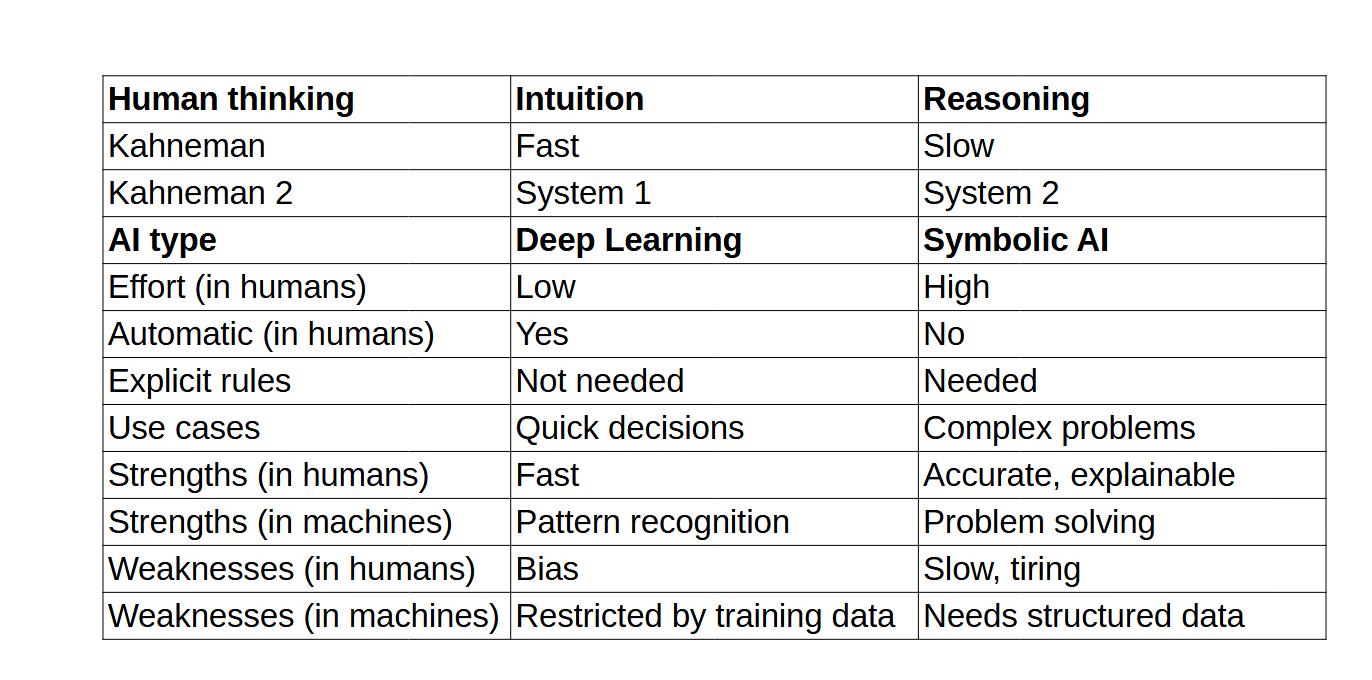

In his 2011 book Thinking, Fast and Slow, Daniel Kahneman described two types of thinking. Fast thinking is human intuition, which he also called System 1. It is quick, automatic, effortless, and enables us to make predictions and decisions quickly without explicit rules. It is good for everyday tasks, and for tasks essential to survival, like recognizing faces, understanding simple language, and detecting threats.

Unfortunately, intuition is also prone to bias and error, and it makes us jump to conclusions. Long passages of Kahneman’s book are devoted to describing the many cognitive biases which afflict humans. When we rely on intuition, our true motivations are often obscured, even from ourselves.

The slow thinking in Kahneman’s title is reasoning, which he also calls System 2 thinking. It is deliberate, analytical, and requires effort. It is accurate when given enough time and attention, and allows us to solve complex problems. It is transparent and explainable.

Since the birth of AI as a science at a summer conference at Dartmouth College in New Hampshire in 1956, there have been two approaches to AI, and these approaches map onto Kahneman’s categories of fast and slow thinking.

Artificial neural networks, which we know today as deep learning, are like Kahneman’s System 1 thinking – fast, or intuitive, thinking. They require training on large datasets, and are good for image recognition, language generation, and game-playing. Artificial neural networks are susceptible to human-derived bias in their datasets, and they struggle to generalise outside their training data.

As a type of artificial neural network, neuromorphic computing falls into the System 1 category.

Symbolic AI, which also became known as good old-fashioned AI, or GOFAI, is like Kahneman’s System 2 thinking – slow thinking, or reasoning. It is good for solving complex problems and interpreting data. The reasoning process can be retraced and explained. It requires explicit rules and structured input data.
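To make "explicit rules" and a "retraceable" reasoning process concrete, here is a minimal sketch of forward chaining, one classic symbolic technique. The rules and fact names are invented purely for illustration and do not come from any real system.

```python
# Hypothetical rules for illustration: (set of premises, conclusion).
RULES = [
    ({"has_feathers", "lays_eggs"}, "is_bird"),
    ({"is_bird", "cannot_fly", "swims"}, "is_penguin"),
]


def forward_chain(facts, rules):
    """Apply rules until nothing new can be derived.

    Returns the full set of known facts and a trace of each rule firing,
    so every conclusion can be retraced to the facts that produced it.
    """
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires only when all its premises are already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                trace.append(f"{', '.join(sorted(premises))} => {conclusion}")
                changed = True
    return known, trace


facts, trace = forward_chain(
    {"has_feathers", "lays_eggs", "cannot_fly", "swims"}, RULES
)
for step in trace:
    print(step)
```

Each printed line records which facts triggered which conclusion, which is the kind of explanation a trained neural network does not provide by default. The price is that someone has to write every rule by hand and supply the input as structured facts.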

Some experts have argued for years that human-level AI will require these two types of thinking to be combined, as they are in humans. This combination is sometimes called Neurosymbolic AI.

Although the science of AI got started in 1956, it rarely troubled the mainstream media until 2012, when Geoff Hinton and some colleagues figured out a way to get artificial neural networks to function well. This became known as deep learning, which gave us the miracles we use daily on our smartphones, like search, maps, image recognition, and translation.

2012 was the first AI Big Bang. The second happened in 2017, when some Google researchers published a paper called Attention Is All You Need, which introduced a type of neural network called the transformer. Transformers gave us large language models, like GPT-4, Claude, Gemini, Llama, and so on.

Maybe the third AI Big Bang will be a breakthrough with either neuromorphic or neurosymbolic AI – and it might happen soon.